Output_layer= Dense(1, activation="linear")(Layer_2) Layer_2 = Dense(4, activation="relu")(Layer_1) Layer_1 = Dense(4, activation="relu")(input_layer) #Import the librariesįrom import Modelįrom import Input,Dense # Creating the layers

KERAS SEQUENTIAL CODE

The code below will give you better clarity. Then put the previous layer in a set of parentheses.You define a layer by giving it a name, specifying the number of neurons, activation function, etc.Here you define an input layer and specify the input size= Input(shape= ).It automatically recognizes the input shape. You don’t have to specify a separate input layer in the sequential API. pile(optimizer='adam',loss='mse') # training the model Model.add(Dense(1)) # defining the optimiser and loss function

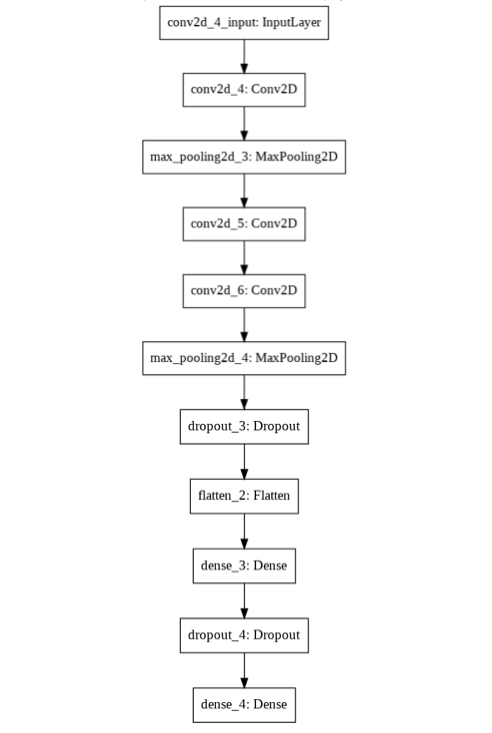

Now let’s proceed to build the neural network using both the APIs for the above network A.Using Sequential API #Import the libraries from import Sequentialįrom import Dense, Activationįrom import Adam # Creating the model model = Sequential() model.add(Dense(4,activation='relu')) #<- You don't have to specify input size.Just define the hidden layers Let’s say we want to build a neural network that has 3 inputs (x1,x2,x3), 2 hidden layers with 4 neurons each and one output y. We will focus more on the functional API as it is helpful to build more complex models. We will use a toy neural network for better understanding and visualization and then will try to understand using the codes and apply it to a real use case. So which one to use? In this article, we will try to understand the two different architectures and which one to use when. And also it can have multiple inputs and outputs. It is more powerful than the sequential API in the sense branching or sharing of layers is allowed here. It is more flexible than the sequential API. Also, you can’t have multiple inputs or outputs. But sharing of layers or branching of layers is not allowed (We will see what sharing or branching means later). They are as follows: 1.using Sequential API Keras offers two ways to build neural networks. Luckily we have Keras, a deep learning API written in Python running on top of Tensorflow, that makes our work of building complex neural networks much easier.

Neural networks are very complex in architecture and computationally expensive. Neural networks with many layers are referred to as deep learning systems. Each layer is made of a certain number of nodes or neurons. A basic neural network consists of an input layer, a hidden layer and an output layer. Inspired by how human brains work, these computational systems learn a relationship between complex and often non-linear inputs and outputs.

Neural networks play an important role in machine learning. This article was published as a part of the Data Science Blogathon Introduction
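To see the two APIs side by side on the toy network (3 inputs, two hidden layers of 4 neurons each, one output), here is a minimal sketch rather than a definitive implementation. Several things in it are assumptions that do not come from the article: the `tensorflow.keras` import paths, the explicit `Input` passed to `Sequential`, the `Model(inputs=..., outputs=...)` wrapping step, the variable names, and the random toy data whose target is simply the sum of the three inputs.

```python
import numpy as np
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Input, Dense

# Toy data (assumed for illustration): 32 samples, 3 features, target = sum of features
rng = np.random.default_rng(0)
X = rng.random((32, 3)).astype("float32")
y = X.sum(axis=1, keepdims=True)

# Sequential API: layers are stacked one after another
seq_model = Sequential([
    Input(shape=(3,)),              # explicit input so the model is built immediately
    Dense(4, activation="relu"),
    Dense(4, activation="relu"),
    Dense(1, activation="linear"),
])

# Functional API: each layer is called on the output of the previous one
inputs = Input(shape=(3,))
hidden_1 = Dense(4, activation="relu")(inputs)
hidden_2 = Dense(4, activation="relu")(hidden_1)
outputs = Dense(1, activation="linear")(hidden_2)
func_model = Model(inputs=inputs, outputs=outputs)

# Both models are compiled and trained the same way
for m in (seq_model, func_model):
    m.compile(optimizer="adam", loss="mse")
    m.fit(X, y, epochs=1, verbose=0)

# Dense parameters = inputs * units + units:
# (3*4 + 4) + (4*4 + 4) + (4*1 + 1) = 16 + 20 + 5 = 41
seq_params = seq_model.count_params()
func_params = func_model.count_params()
pred_shape = func_model.predict(X, verbose=0).shape
```

Both models describe the same architecture, so they end up with the same number of trainable parameters. The difference is that the functional version keeps every intermediate tensor (`hidden_1`, `hidden_2`) as a named variable, which is what makes branching or sharing layers possible.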
